AI Research

Last week's steps

Spawning a separate object per player and possessing it

The purpose of this task was to replace the “hardcoded” player count with a more dynamic system. In the beginning, there was exactly one player actor available, and it was possessed by the first player to join (the server itself, as we do not use a dedicated server architecture). Every other player who joined afterward got something like a default player actor that did not react to input and was stuck in the geometry.

Sidenote: “Actor” here means a game object that is represented in the world and can be possessed (strictly speaking, Unreal calls the possessable kind of actor a Pawn). “Possess” means attaching some kind of input source to an actor. That could be the player’s controller or even an AI.

I separated them by defining some spawn positions and catching each joining player in order to spawn a player actor of their own. This also involved the very first network-specific coding, as this procedure must be done by the server only. The actors are then replicated to the clients on a very basic level.
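The spawning logic can be sketched in plain C++ without any engine types. This is only a minimal illustration, not the project's code: `SpawnManager`, `SpawnPoint`, `onPlayerJoined` and the `hasAuthority` flag are hypothetical names, and handing out positions round-robin is an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical stand-ins for illustration; none of these names come from
// the project or from the Unreal Engine API.
struct SpawnPoint { float x, y, z; };
using PlayerId = int;

class SpawnManager {
public:
    SpawnManager(std::vector<SpawnPoint> points, bool hasAuthority)
        : points_(std::move(points)), hasAuthority_(hasAuthority) {
        assert(!points_.empty() && "at least one spawn position is required");
    }

    // Called whenever a player joins. Returns the spawn position chosen for
    // that player's own actor, or nullptr when running on a client, because
    // only the server spawns and clients receive the actor by replication.
    const SpawnPoint* onPlayerJoined(PlayerId id) {
        if (!hasAuthority_) return nullptr;
        auto it = assigned_.find(id);
        if (it == assigned_.end()) {
            // Assumption: positions are handed out round-robin.
            const std::size_t index = assigned_.size() % points_.size();
            it = assigned_.emplace(id, index).first;
        }
        return &points_[it->second];
    }

    // Number of players that have been given an actor so far.
    std::size_t playerCount() const { return assigned_.size(); }

private:
    std::vector<SpawnPoint> points_;
    bool hasAuthority_;
    std::unordered_map<PlayerId, std::size_t> assigned_;
};
```

The important part is the authority check at the top of `onPlayerJoined`: a client never spawns anything itself, it only ever sees what the server replicates to it.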

Sidenote: “Replication” means copying the data of something over the network so that the clients see the same results without performing the calculations on their own. This method keeps gameplay synchronized and helps prevent cheating.

In the end, all clients can see each other moving around.

Getting the mobile development pipeline to work

This step was about installing all the missing software components needed to build for Android and even playtest the game on the device. This was a bit tricky, as some of the information was outdated, misleading or just missing. These things may be obvious to an experienced Android developer, but not to me. Together with Daniel, I got through it.

Testing the cross-platform network connectivity

This was the most important task of the four. It was a proof of concept to see whether it is possible to create one build for Windows and another for Android and still connect both over a network into the same game. In the end, it was surprisingly easy and took hardly any effort.

Getting more control over the network session by creating it manually

As I hated entering the IP address every time to establish a network connection, I looked for a way to automate that process. Now, the server creates a session and listens for network broadcasts from searching clients. The clients then use the session data to connect to the game automatically.

Basic AI

The majority of my time went into AI research and testing. I wanted to get a basic understanding of AI Perception, Behavior Trees, Environment Queries, Blackboards, Tasks, Services, and Decorators. Without knowing what our actual AI should be able to do, I started to develop a prototype that is capable of moving through the level and more or less shooting at enemies. The built-in methods of the Unreal Engine sped up that process and pushed me toward new demands: What if the AI does not just run towards the player? Can it even look for cover? It can!

But right now my AI is not able to perform different actions at the same time. It calculates the best location relative to its enemy: a location from which the AI is able to shoot and where half of its body is covered by geometry. Then it moves to that location and shoots at the enemy. But moving takes so much time that the target may have moved on to a location where the AI is no longer in cover or cannot even shoot at it. I need to re-run the calculations in between. But that is maybe a task for next week.

The following video demonstrates two AIs running the algorithm described above to fight each other. The Behavior Tree shows the execution of the red AI:
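The location choice described above, together with the missing re-check while moving, can be sketched in engine-free C++. This is a rough illustration under assumptions: `CoverCandidate`, `pickCoverLocation` and `needsNewLocation` are hypothetical names, the two flags stand in for whatever tests the engine runs per location, and preferring the candidate nearest to the AI is my assumption about what "best" means.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical candidate location. In the engine, the two flags would be
// the results of per-location tests; here they are plain inputs.
struct CoverCandidate {
    float x, y;
    bool canShootTarget;    // clear line of fire from this spot
    bool lowerBodyCovered;  // geometry hides roughly half of the body
};

// Returns the index of the nearest candidate that allows shooting from
// cover, or -1 if no candidate qualifies.
int pickCoverLocation(const std::vector<CoverCandidate>& candidates,
                      float selfX, float selfY) {
    int best = -1;
    float bestDistSq = 0.0f;
    for (std::size_t i = 0; i < candidates.size(); ++i) {
        const CoverCandidate& c = candidates[i];
        if (!c.canShootTarget || !c.lowerBodyCovered) continue;
        const float dx = c.x - selfX;
        const float dy = c.y - selfY;
        const float distSq = dx * dx + dy * dy;
        if (best < 0 || distSq < bestDistSq) {
            best = static_cast<int>(i);
            bestDistSq = distSq;
        }
    }
    return best;
}

// Meant to be called while moving: true when the chosen spot no longer
// offers both cover and a line of fire, so a new one should be picked.
bool needsNewLocation(const std::vector<CoverCandidate>& candidates, int chosen) {
    assert(chosen >= 0 && static_cast<std::size_t>(chosen) < candidates.size());
    const CoverCandidate& c = candidates[static_cast<std::size_t>(chosen)];
    return !(c.canShootTarget && c.lowerBodyCovered);
}
```

Calling `needsNewLocation` periodically during the move, with the flags refreshed against the target's current position, is one way to do the "calculations in between" without waiting for the move to finish.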

Friend and Foe

While experimenting with a lot of AI actors, I experienced some frame drops. This is due to the fact that each AI perceives every other AI and therefore includes them in every calculation. My go-to option was the GenericTeamAgentInterface, and as this little thing is not usable via the Blueprint system, I needed to implement it in C++. Working with C++ in Unreal comes with a huge overhead of information that you need to know as a programmer. The whole engine is built around a lot of very specific macros to ensure compatibility between every aspect of the engine. I am glad to have that feature implemented, but I still don’t know what every line of code I wrote actually does. Nevertheless, this interface enables us to put every actor into a team, and actors in the same team are excluded from a lot of operations on each other. I am now able to run fifty AI actors without any performance issues.
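Why teams help so much becomes clear when counting how many observer/observed pairs remain. The following is a plain C++ model, not Unreal's actual interface: `TeamId`, `attitudeTowards`, `countRelevantPairs` and `makeTeams` are hypothetical names, and treating every other team as hostile is an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical model, not Unreal's actual interface: every actor simply
// carries a small team id.
using TeamId = std::uint8_t;

enum class Attitude { Friendly, Hostile };

// Assumption: same team means friendly, anything else is hostile.
Attitude attitudeTowards(TeamId self, TeamId other) {
    return self == other ? Attitude::Friendly : Attitude::Hostile;
}

// Counts the (observer, observed) pairs that still need processing.
// With ignoreFriendly == false every actor considers every other actor.
std::size_t countRelevantPairs(const std::vector<TeamId>& teams, bool ignoreFriendly) {
    std::size_t pairs = 0;
    for (std::size_t i = 0; i < teams.size(); ++i) {
        for (std::size_t j = 0; j < teams.size(); ++j) {
            if (i == j) continue;
            if (ignoreFriendly &&
                attitudeTowards(teams[i], teams[j]) == Attitude::Friendly) {
                continue;
            }
            ++pairs;
        }
    }
    return pairs;
}

// Helper: distributes `count` actors round-robin over `teamCount` teams.
std::vector<TeamId> makeTeams(std::size_t count, std::size_t teamCount) {
    assert(teamCount > 0 && teamCount <= 255);
    std::vector<TeamId> teams(count);
    for (std::size_t i = 0; i < count; ++i) {
        teams[i] = static_cast<TeamId>(i % teamCount);
    }
    return teams;
}
```

In this model, fifty actors without team filtering produce 2450 pairs. Putting all fifty on one team leaves 0 pairs among them, and splitting them into two teams of 25 leaves 1250.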

Pathfinding

Working with more than one AI reveals some major problems with the pathfinding algorithm. Every AI calculates a perfect path to a destination and moves only on exactly this path. If two AI actors bump into each other, for example at a corner, they just look at each other and have no clue how to resolve the situation. The following video demonstrates the problem up to a major jam (each video includes 48 AI actors, and their color changes from green to red to represent the inability to move in time):

The “Detour Crowd Component” was the first solution, and it helped a lot. The following video demonstrates how the AIs are able to resolve all those corner situations on their own. But they are still overwhelmed in doorways or hallways that do not provide enough space.

“Reciprocal Velocity Obstacle” is the next technique, which I simply activated. This method allows the AIs to push each other around. It improves the overall performance, as every part of the jam is always in motion, which potentially enables other actors to move through it. Here is a video that showcases that motion:

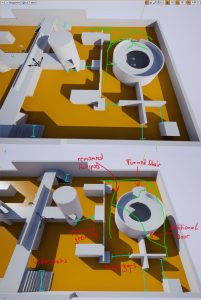

The last video also demonstrated how long it takes each actor to get through that jam. The problem is that this time is far longer than the time it takes for another actor to join the jam. Those jams could therefore last forever. There is only one option left, besides developing a very computation-heavy algorithm, and that is level optimization. The following screenshots compare the old design with the new one. In general, I tried to remove hotspots, offer additional paths and ensure that each passage is wide enough for at least two actors to pass side by side, preferably three:

The results can be viewed in the following video:

Nevertheless, the number of AIs moving around is the real problem. In each of the scenarios, there were 48 of them! Reducing that number will, of course, reduce the risk of running into the problem. I learned a lot about pathfinding and about ways to benchmark and optimize a level.
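The reasoning about jams can be made concrete with a toy model: as long as actors join a jam faster than they get through it, the jam grows. This is only a sketch under assumptions; `jamSizeAfter` and its fixed tick intervals are hypothetical and not measured from the videos.

```cpp
#include <cassert>

// Hypothetical tick-based model of a single jam; the parameter names and
// the fixed intervals are assumptions, not measurements from the videos.
int jamSizeAfter(int ticks, int initialSize, int joinEvery, int passEvery) {
    assert(ticks >= 0 && initialSize >= 0 && joinEvery > 0 && passEvery > 0);
    int size = initialSize;
    for (int t = 1; t <= ticks; ++t) {
        if (t % joinEvery == 0) ++size;              // another actor arrives
        if (t % passEvery == 0 && size > 0) --size;  // one actor gets through
    }
    return size;
}
```

With five actors stuck, one joining every 2 ticks and one passing every 6 ticks, `jamSizeAfter(60, 5, 2, 6)` returns 25, so the jam keeps growing. Swapping the intervals, `jamSizeAfter(60, 5, 6, 2)` returns 0. Fewer actors lengthen the join interval and wider paths shorten the pass interval, which is why both changes help.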

We defined the most valuable features for our very first playable:

First-Person-Player:

- grappling hook (attach to surfaces)

- pulling enemies

- energy resource management

- left thumbstick (move and strafe)

- right thumbstick (look and aim)

- jump

Mobile-Device-Player:

- spawns the enemies

Enemies:

- First Drone that shoots

- Second Drone with melee (electric), self-destructing or energy stealing